Method

The problem considered is to generate a trajectory for a robot that yields high-quality 3D models of a bounded

target scene and fulfills robot constraints like time and path length. However, finding

the best sequence of viewpoints is time-consuming and prohibitively expensive. We adopt the

greedy strategy and trade it as a Next-Best-View (NBV)

problem. At each step of the reconstruction, the information

gain of viewpoints within a certain range of the current

position of a robot are evaluated based on the partial reconstruction

scene. An informative path considering both the

information gain and the path length is then planned and

executed. Images captured along the view path are selected

and are fed into the 3D reconstruction of next step. The

process is repeated until some critera are met.

Under the greedy strategy, our pipeline consists of three

components: a Mobile Robot module, a 3D Reconstruction

module and a View Path Planning module and shown as below:

The Mobile Robot module takes the images at given viewpoints

and the robot locates itself by a motion capture system.

During the simulation, Unity Engine renders images at

given viewpoints. The 3D Reconstruction module reconstructs

a scene by combining an implicit neural representation

(e.g. NeRF) and a volumetric representation (A

coarse TSDF). The implicit neural representation provides

high quality 3D models with fine-grained details and also

neural uncertainty as the information gain for the view path

planning. The coarse TSDF filter viewpoints and establishes

an occupancy grid map for efficient distance and occupancy

query, and viewpoint filtering. The View Path Planning

module first leverages volumetric representations for efficient

viewpoint selection, approximates the information gain field

by a MLP and plans an informative view path based the

A* algorithm.

Performance

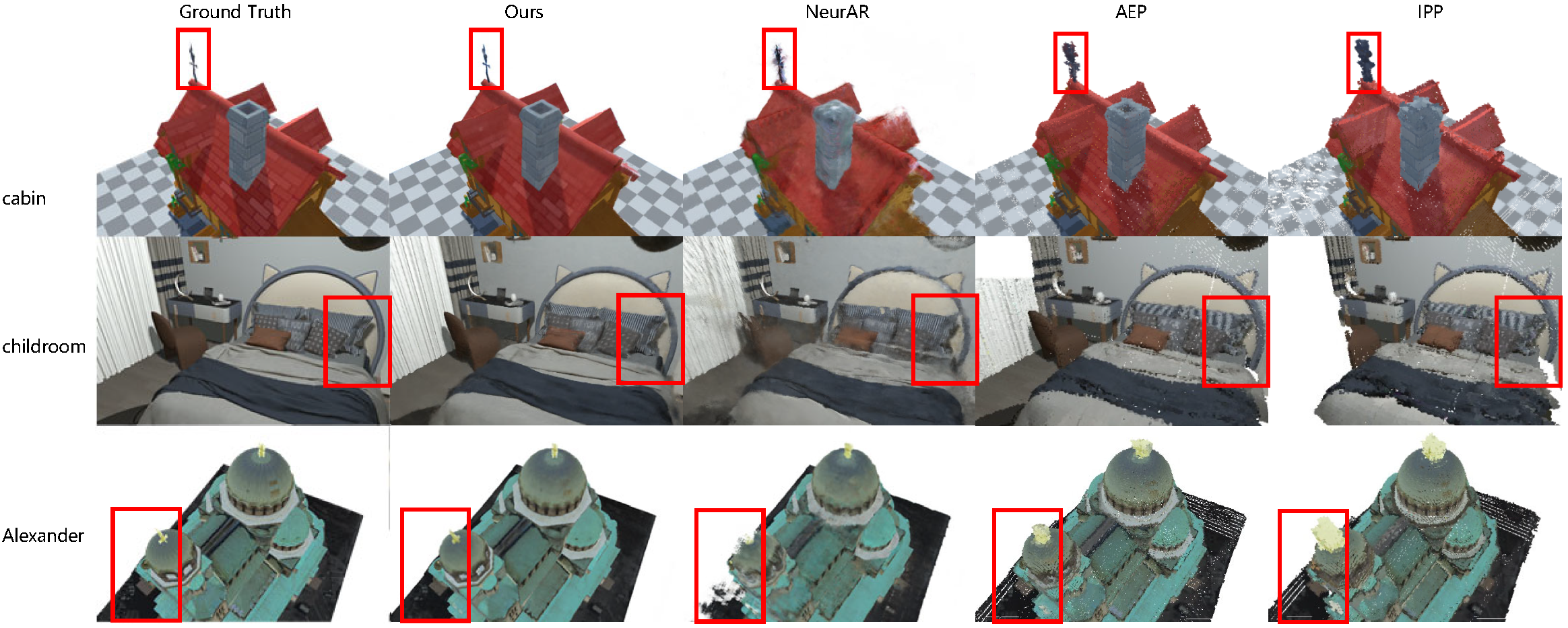

Comparison of the reconstruction scenes

Trajectories and the reconstruction results

The results are seen from top view in childroom scene.

The experiment on a real scene

Related works

Some related works also focus on autonomous implicit reconstruction:

- NeurAR: Neural Uncertainty for Autonomous 3D Reconstruction NeurAR

Bibtex

@inproceedings{zeng2023efficient,

title={Efficient view path planning for autonomous implicit reconstruction},

author={Zeng, Jing and Li, Yanxu and Ran, Yunlong and Li, Shuo and Gao, Fei and Li, Lincheng and He, Shibo and Chen, Jiming and Ye, Qi},

booktitle={2023 IEEE International Conference on Robotics and Automation (ICRA)},

pages={4063--4069},

year={2023},

organization={IEEE}

}